Robotics and Automation

| 1980 | 1990 | 2000 | 2010 |

| Emerging of PCs | Information superhighway | Human-center healthcare robotics |


Biomechatronics

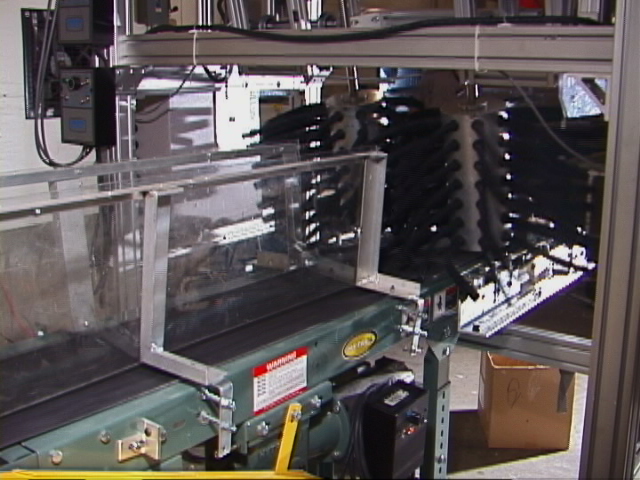

Flexible Part-feeding System for Machine Loading and Assembly

In the 1980s, the cost of feeding parts to a robot for either machine loading or assembly in a flexible manufacturing system (FMS) was often underestimated. A survey of part-feeding methods applicable to a broad class of flexible manufacturing systems [1] indicated that part feeding may comprise two-thirds of the overall investment and is usually the source of a large percentage of work stoppages and defects. To reduce this cost, the design concept of a fully integrated vision-guided part-feeding system was developed [2]. The integrated system, designed to combine maximum flexibility and reliability with minimum cost and cycle time, is a flexible computer-controlled system that takes maximum advantage of retro-reflective vision sensing [3] for feeding parts onto machine tools or into assembly processes. Because retro-reflective material has a distinct surface reflectance that is not commonly found in natural or man-made objects, it enables reliable digital images of high object-to-background contrast to be obtained without a priori knowledge of object geometry and surface reflectance. These features allow the location and orientation of a part to be determined with relatively simple, high-speed computation, without the need for a detailed reflectance map of the parts to be handled.
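To illustrate why a high-contrast image makes pose computation simple, the following minimal sketch thresholds a grayscale image and recovers the centroid and principal-axis orientation from image moments. It is not the method of [2] or [3]; the function name `part_pose`, the fixed threshold, and the assumption that the region of interest is brighter than the rest of the image are all illustrative choices.

```python
import math


def part_pose(image, threshold=128):
    """Return (cx, cy, theta) for the bright region of a grayscale image.

    image: list of rows of pixel intensities (0-255); x runs along a row,
           y runs down the rows (usual image coordinates).
    theta: orientation of the principal axis in radians, measured from the
           x axis in image coordinates.
    The threshold value is an assumption made for this sketch.
    """
    pixels = [
        (x, y)
        for y, row in enumerate(image)
        for x, value in enumerate(row)
        if value >= threshold
    ]
    if not pixels:
        raise ValueError("no pixels above threshold")

    # Zeroth and first moments give the area and the centroid.
    area = len(pixels)
    cx = sum(x for x, _ in pixels) / area
    cy = sum(y for _, y in pixels) / area

    # Second central moments give the orientation of the principal axis.
    mu20 = sum((x - cx) ** 2 for x, _ in pixels)
    mu02 = sum((y - cy) ** 2 for _, y in pixels)
    mu11 = sum((x - cx) * (y - cy) for x, y in pixels)
    theta = 0.5 * math.atan2(2.0 * mu11, mu20 - mu02)
    return cx, cy, theta


# Usage: a three-pixel diagonal streak in a 3x3 image.
diagonal = [
    [255, 0, 0],
    [0, 255, 0],
    [0, 0, 255],
]
cx, cy, theta = part_pose(diagonal)
```

Only sums over the thresholded pixels are needed, which is consistent with the point above that no reflectance map of the part is required once the contrast is high.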

Vision-based Robotic Pickup of Moving Objects

Paper [4] addresses the problem of picking up moving objects from a vibratory feeder with robotic hand-eye coordination. Since the dynamics of moving targets on the vibratory feeder are highly nonlinear and often impractical to model accurately, the problem was formulated in the context of prey capture, with the robot as a pursuer and a moving object as a passive prey. A vision-based intelligent controller was developed and implemented in the Factory-of-the-Future Kitting Cell at Georgia Tech. The controller consists of two parts. The first part, based on the principle of fuzzy logic, guides the robot to search for an object of interest and then pursue it. The second part, an open-loop estimator built upon a back-propagation neural network, predicts the position of the target at which the robot executes the pick-up task. The feasibility of the concept and the control strategies was verified by two experiments. The first experiment evaluated the performance of the fuzzy logic controller in following the highly nonlinear motion of a moving object. The second experiment demonstrated that the neural network provides a fairly accurate location estimate for part pick-up once the target is within the vicinity of the gripper.
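The pursuit stage can be pictured with a very small fuzzy controller. The sketch below is a one-dimensional illustration only: the three fuzzy sets, the triangular membership functions, and the parameters `span` and `v_max` are assumptions made here, and the rule base actually used in [4] is not reproduced. The neural-network estimator is omitted.

```python
def tri(x, a, b, c):
    """Triangular membership with feet at a and c and peak at b."""
    if x <= a or x >= c:
        return 0.0
    if x <= b:
        return (x - a) / (b - a)
    return (c - x) / (c - b)


def fuzzy_speed(error, span=100.0, v_max=50.0):
    """Map a position error (target minus gripper) to a velocity command.

    Three rules (hypothetical): error Negative -> move back at v_max,
    error Zero -> hold, error Positive -> move forward at v_max.
    The output is the membership-weighted average of the rule outputs.
    """
    e = max(-span, min(span, error))  # saturate the input range
    weights = (
        tri(e, -2 * span, -span, 0.0),   # Negative
        tri(e, -span, 0.0, span),        # Zero
        tri(e, 0.0, span, 2 * span),     # Positive
    )
    outputs = (-v_max, 0.0, v_max)
    return sum(w * o for w, o in zip(weights, outputs)) / sum(weights)


def pursue(target_path, gripper=0.0, dt=0.1):
    """Step the gripper toward each sampled target position.

    Returns the remaining error after the last sample.
    """
    for target in target_path:
        gripper += fuzzy_speed(target - gripper) * dt
    return target_path[-1] - gripper


# Usage: chase a stationary target 80 units away for 100 control steps.
remaining = pursue([80.0] * 100)
```

No model of the target dynamics appears anywhere in the controller, which is the property that makes a rule-based pursuer attractive when the feeder motion is hard to model.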

The need to incorporate manufacturing and assembly considerations into the design of products is well documented. The same need exists for recycling considerations, and it will only grow in importance as environmental laws become stricter and customers demand more environmentally friendly products. An important cost driver for recycling is disassembly. For this reason, designers need tools that help them determine the disassembly sequence early in the design stage. Paper [5] addresses this issue by presenting a computer program that helps solve for the preferred robotic disassembly sequences for a product. Results from the program for a single-use camera and a hand-saw reciprocating mechanism are presented. Problems, improvements to the employed solution technique, and the possible use of simulated annealing as a solution technique are discussed.

An important aspect of recycling and reuse in environmentally conscious manufacturing is disassembly. Although disassembly systems contain the same basic elements as assembly systems, the disassembly problem differs significantly from the assembly problem because the condition of the incoming product is not controlled. In addition, disassembly does not necessarily require complete dismantling of the product, whereas assembly builds a product completely. Paper [6] describes a model for automated disassembly that accounts for work-cell interaction and used-product constraints. The model provides an essential means to determine, in real time, the next component for disassembly using knowledge of the product design and sensor feedback, minimizing the number of steps needed to remove the goal components. Sets of components for removal were resolved by minimizing the set-up time for disassembling the component. Given the model, a controller for product disassembly is defined that can account for missing and known replacement components. The controller can recover from unknown replacement components and jammed components when alternate removal sequences exist to meet the cell goal. Two case studies are presented and experimentally simulated. Simulation results based on real products, vision sensor measurements, and process inputs are presented and discussed. It is expected that the concepts demonstrated through these case studies can provide useful insights for other mechanical assemblies.
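The idea of choosing the next component from design knowledge plus sensor feedback can be sketched with a simple blocking relation. This is an illustrative reading, not the model of [6]: the `blocked_by` dictionary, the function names, and the example product are all hypothetical, and set-up time and jammed components are not modeled.

```python
def required_removals(blocked_by, goals, removed=frozenset()):
    """Return the set of components that must still be removed.

    blocked_by: dict mapping a component to the components that must be
                taken off before it can be removed (design knowledge).
    goals:      components the cell is asked to recover.
    removed:    components already gone, e.g. reported missing by sensors.
    """
    required = set()
    stack = [g for g in goals if g not in removed]
    while stack:
        part = stack.pop()
        if part in required:
            continue
        required.add(part)
        # Only blockers that are still present need to be removed.
        stack.extend(b for b in blocked_by.get(part, ()) if b not in removed)
    return required


def next_candidates(blocked_by, goals, removed=frozenset()):
    """Return the required components that can be removed right now."""
    required = required_removals(blocked_by, goals, removed)
    return sorted(
        part
        for part in required
        if all(b in removed for b in blocked_by.get(part, ()))
    )


# Usage with a hypothetical product: a screw holds a cover, and the cover
# hides both a battery and a circuit board; the board hides a motor.
blocked_by = {
    "cover": {"screw"},
    "battery": {"cover"},
    "board": {"cover"},
    "motor": {"board"},
}
first = next_candidates(blocked_by, goals={"battery"})
after_missing_screw = next_candidates(
    blocked_by, goals={"battery"}, removed={"screw"}
)
```

Because only the blockers of the goal components are traversed, the board and motor are never scheduled when the goal is the battery, which reflects the point above that complete dismantling is not required.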

Automated Live-bird Handling

The process of transferring live birds from conveyors to moving shackles is a laborious, unpleasant and hazardous job. According to the U.S. Department of Labor, poultry-processing workers suffer disorders of the hand, wrist, and shoulder at up to 16 times the national average for private industry. It is also extremely difficult to attract new workers to the job. Automation has the potential to improve product quality and economic return by reducing rates of bruising/stress-related meat downgrading. Automation would also reduce labor costs and human health hazards.

Jointly supported by the Georgia Agriculture Technology Research Program (ATRP), the US Poultry and Eggs Association (USPEA) and several equipment companies, an interdisciplinary team consisting of Georgia Tech researchers and poultry scientists from the University of Georgia (UGA) has developed an automated live-bird transfer system (LBTS) to mechanically transfer live birds on conveyors to moving kill-line shackles at production speed.

The LBTS uses revolving flexible fingers to cradle the bird by its body while manipulating the kinematics of both legs on a moving conveyor for inserting them into a shackle [7-9]. Unlike the handling of engineering (or non-reactive) objects, an LBTS must be capable of accommodating a limited range of size/shape variations and some natural reaction, and must adapt in real time to the position/orientation/posture of live birds (often in a dark room in order to quiet the birds) without bruising the birds.

To address this problem, several novel designs and analysis methods were developed:

- Several computationally efficient methods for designing compliant “robotic hands” with highly damped flexible fingers and for analyzing deformable contacts were developed; see “Compliant Systems” page for details. These methods greatly reduce the number of design configurations and live birds to be tested in experiments.

- Live bird handling requires locating the absolute position of the object on a moving conveyor (often in a dark room in order to quiet the birds [10]). In [11], we developed a novel lateral optical sensing method for constructing the 2D profile of a live-product on a moving conveyor. Unlike most line array scanners which are designed to provide a 2D image of a static object, the proposed method utilizes an orthogonal pair of line array scanners to detect object slippage on the conveyor in real time. We illustrated numerically and experimentally the effectiveness of this sensing method with a number of practical applications involving both engineering and natural objects.

- We developed a general method utilizing some properties observed in human visual systems to create artificial color contrast (ACC) between features so that features can be more accurately presented in color space and efficiently processed for detection; see “Machine Vision” page for details.

- Repetitive actuation, re-orientation, and alignment are often executed mechanically for high-throughput handling. To eliminate mechanical wear and tear, a general method was developed to design energy-efficient non-contact electromagnetic (EM) actuators with high-coercive permanent magnets; see “Magnetics” page for details. This method offers closed-form solutions to characterize the magnetic field of an EM actuator.

References:

- Lee, K-M., "Flexible Part-Feeding System for Machine Loading and Assembly, Part I: A State-of-the-art Survey," Int. J. of Production Economics. 1991, vol. 25, pp. 141-153.

- Lee, K-M., "Flexible Part-Feeding System for Machine Loading and Assembly, Part II: A Cost-Effective Solution," Int. J. of Production Economics. 1991, vol. 25, pp. 155-168.

- Lee, K-M., "Design Concept of an Integrated Vision System for Cost-Effective Flexible Part-Feeding Applications," ASME J. of Engineering for Industry. November 1994, vol. 116, pp. 421-428.

- Lee, K-M. and Y. Qian, "Intelligent Vision-Based Part-Feeding on Dynamic Pursuit of Moving Objects," ASME J. of Manufacturing Science and Engineering (JMSE). August 1998, vol. 120, pp. 640-647.

- Scheuring, J., B. Bras, and K-M. Lee, "Significance of Design for Disassembly in Integrated Disassembly and Assembly Processes," Int. J. of Environmentally Conscious Design & Manufacturing. 1994, vol. 3, no. 2, pp. 21-33.

- Lee, K-M. and M. Bailey-Vankuren, "Modeling and Supervisory Control of a Disassembly Automation Workcell based on Blocking Topology," IEEE Trans. on Robotics and Automation. February 2000, vol. 16, no. 1, pp. 67-77.

- Lee, K-M., "Design Criteria for Developing an Automated Live-Bird Transfer System," IEEE Trans. on Robotics and Automation. August 2001, vol. 17, no. 4, pp. 483-490.

- Webster, A. B. and K-M. Lee, "Automated shackling: how close is it?" Poultry International. April 2003, vol. 42, no. 4, pp. 28-36.

- Lee, K-M., J. Joni, and X. Yin, "Imaging and Motion Prediction for an Automated Live-Bird Transfer Process," Proc. of the ASME Dynamic Systems and Control Division-2000, Orlando, FL, Nov. 5-10, vol. 1, pp. 181-188.

- Lee, K-M. and S. Foong, "Lateral Optical Sensor with Slip Detection for Locating Live Products on Moving Conveyor," IEEE Trans. on Automation Sci. and Eng. Jan. 2010, vol. 7, no. 1, pp. 123-132.

Professor Kok-Meng Lee

George W. Woodruff School of Mechanical Engineering

Georgia Institute of Technology

Atlanta, GA 30332-0405

Tel: (404)894-7402. Fax: (404)894-9342. Email: kokmeng.lee@me.gatech.edu

http://www.me.gatech.edu/aimrl/